Justice served? Discrimination in algorithmic risk assessment

In our burgeoning world of information technology, algorithms are at the heart of modern life. An algorithm is a set of step-by-step instructions, often mathematical calculations, that turns ‘inputs’ into ‘outputs’. Algorithms can predict answers to conundrums such as which stocks will rise or fall in value, the most lucrative time to post an advert on social media, which film will be the blockbuster of the summer, and even how likely an offender is to commit another crime. In that last case, algorithmic risk assessment tools have been used in a variety of criminal justice decisions. They assess data such as an offender’s criminal history, education, employment, drug use and mental health, and then predict how likely that person is to reoffend. This rests on a common assumption that past behaviour predicts future conduct; the phrase ‘once a criminal, always a criminal’ is an oversimplified version of this idea.

Weighing up the costs

Imprisonment is a costly affair and can often trap low-risk offenders in a vicious cycle of crime. Criminal justice officials strive to avoid over-reliance on imprisonment and to reduce costs, while also protecting individual liberty and public safety. Algorithmic risk assessment is one method used to meet these goals, notably through a tool called COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). Its reoffending risk scales assess age, criminal history, employment history, drug problems, and vocational/educational problems such as grades and suspensions. More recently, these tools have been used in pretrial settings, offering an alternative to reliance on monetary bail. However, these tools have historically put poor and minority defendants at a disadvantage.

Before the rise of risk assessment algorithms like COMPAS, risk predictions were generally based on gut instinct or the personal experience of the decision-making official. Now, thanks to advances in the behavioural sciences, the arrival of big data, and improvements in statistical modelling, a new wave of algorithmic risk assessment tools has relieved humans of much of the burden of making such weighty decisions.

While algorithmic risk assessment tools like COMPAS have helped criminal justice officials find cost-effective solutions that still protect the public, relying on criminal history to determine an offender’s future has some unexpected consequences. Dr Melissa Hamilton is investigating these consequences in her research at the University of Surrey.


Lifting the veil

Dr Hamilton argues that while automated risk assessment offers benefits in criminal justice cases, it should be subject to more scrutiny: “The guise of science unwittingly convinces many that actuarial scales allow us to accurately and precisely predict the eminently unpredictable – human behaviour. The nonpartisan qualities of numbers and statistics can be both seductive, allowing decision makers to feel that risk can be corralled, and powerful, seemingly insulating criminal justice decisions in a veil of science.”

COMPAS overpredicts the risk for women to reoffend, therefore leading to unfair sentencing of female offenders.

This ‘veil’ has been lifted by civil rights groups and data scientists. In 2016, an investigative report by ProPublica called into question the objectivity and fairness of algorithmic risk assessment in predicting future criminality. The study examined data on over 7,000 arrestees scored on COMPAS in a pretrial setting in a county in southern Florida. It concluded that COMPAS discriminates against Black defendants because it produces a much higher false positive rate for Black defendants than for White defendants: Black defendants who did not go on to reoffend were far more likely to have been labelled high risk. In other words, the tool overpredicts the risk of reoffending for Black defendants.
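The false positive rate at the centre of ProPublica’s finding can be made concrete. Below is a minimal sketch, using a handful of made-up records for two unnamed groups (not ProPublica’s data), that computes for each group the share of people who did not reoffend but were nonetheless labelled high risk. The `Record` type and the `false_positive_rate` function are our own hypothetical illustrations, not part of COMPAS or any published analysis code.

```python
from dataclasses import dataclass


@dataclass
class Record:
    group: str        # demographic group label (hypothetical)
    high_risk: bool   # the tool's prediction: labelled high risk?
    reoffended: bool  # observed outcome during follow-up


def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were labelled high risk."""
    negatives = [r for r in records if r.group == group and not r.reoffended]
    if not negatives:
        raise ValueError(f"no non-reoffenders in group {group!r}")
    false_positives = sum(r.high_risk for r in negatives)  # True counts as 1
    return false_positives / len(negatives)


# Illustrative toy records only; these are NOT ProPublica's data.
records = [
    Record("A", True, False), Record("A", True, False),
    Record("A", False, False), Record("A", False, False),
    Record("A", True, True),
    Record("B", True, False), Record("B", False, False),
    Record("B", False, False), Record("B", False, False),
    Record("B", True, True),
]

for g in ("A", "B"):
    print(g, false_positive_rate(records, g))  # A 0.5, then B 0.25
```

In this toy data, non-reoffenders in group A are twice as likely as those in group B to be labelled high risk (0.5 versus 0.25), which is the kind of disparity ProPublica reported.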

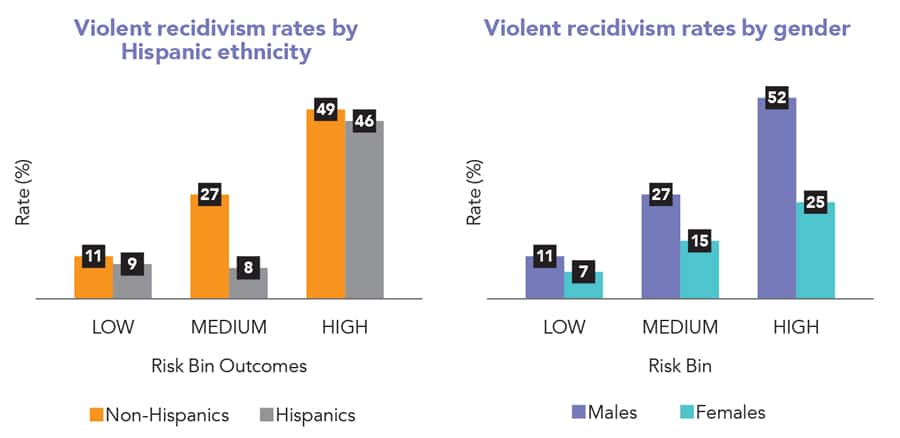

Dr Hamilton investigated this potential for racial bias further in her own study of COMPAS’ assessment of Hispanic defendants and found similar evidence of discrimination. More recently, she has pushed the question of algorithmic fairness into the gender arena, looking at how COMPAS discriminates against female offenders. This issue has rarely been investigated in algorithmic justice research but, like racial bias, it has disturbing consequences for offenders.

A question of gender

Studies confirm that women are far less likely than men to commit new crimes. Indeed, a recent study in the United States found that being male is significantly correlated with reoffending. Moreover, the study reveals that gender is a stronger predictor of reoffending than other variables commonly included in algorithmic risk tools. The obvious question, then, is whether these tools properly recognise this gender-based disparity.

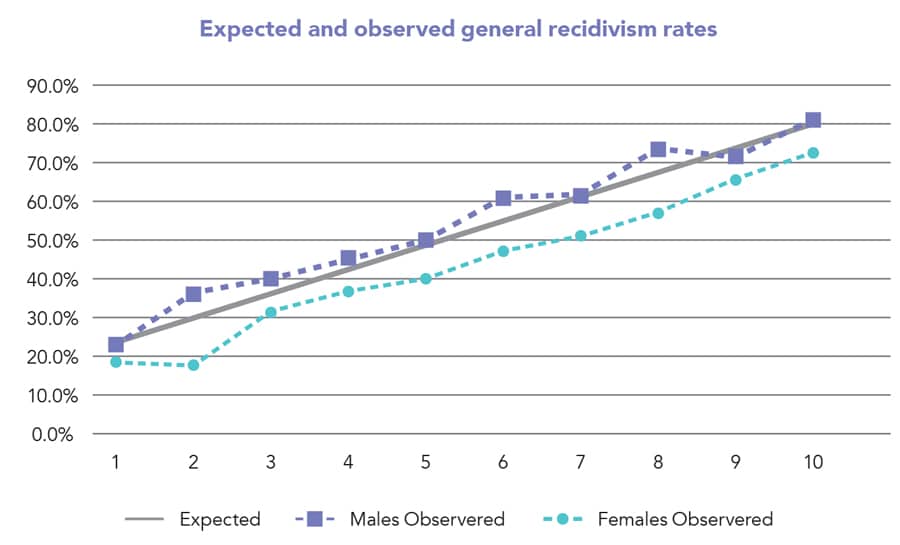

For her study, Dr Hamilton analysed the scores of 6,172 arrestees in Broward County, Florida, who were assessed on the COMPAS general recidivism risk scale soon after their arrest in 2013 and 2014. An earlier validation study from 2010 had already shown that males reoffended at far higher rates than females. Despite these findings, Broward County has continued to use COMPAS scales that are not gender-specific, even though a version attuned specifically to women is available. Dr Hamilton’s research points the same way, showing that the tool overpredicts the risk of women reoffending and therefore leads to unfair penalties for female offenders. Her study also examined the assessment of violent reoffending: among offenders labelled high risk, the reoffending rate for women was less than half that for men (25% compared with 52%).
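The comparison of high-risk women and men is a check on what a ‘high risk’ label actually means for each group: among people given that label, what fraction went on to reoffend? The minimal sketch below uses hypothetical toy records rather than the Broward County data, and the function name `reoffence_rate_among_high_risk` is our own illustration. It computes that fraction separately for each group.

```python
from collections import defaultdict


def reoffence_rate_among_high_risk(records):
    """For each group, the share of people labelled high risk who actually reoffended.

    `records` is an iterable of (group, high_risk, reoffended) tuples.
    """
    labelled = defaultdict(int)    # number labelled high risk, per group
    reoffended = defaultdict(int)  # of those, number who reoffended
    for group, high_risk, did_reoffend in records:
        if high_risk:
            labelled[group] += 1
            reoffended[group] += did_reoffend  # True counts as 1
    return {g: reoffended[g] / labelled[g] for g in labelled}


# Hypothetical toy records, NOT Dr Hamilton's data.
records = (
    [("female", True, True)] * 1 + [("female", True, False)] * 3
    + [("male", True, True)] * 2 + [("male", True, False)] * 2
    + [("female", False, False)] * 4 + [("male", False, True)] * 1
)

rates = reoffence_rate_among_high_risk(records)
print(rates)  # {'female': 0.25, 'male': 0.5}
```

When the same label corresponds to a much lower observed reoffending rate for one group, as with the women in this toy data, the tool is overpredicting risk for that group.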

So, what explains this overprediction? According to Dr Hamilton, one explanation is that the algorithm was trained mostly on male samples and fails to include female-sensitive factors, such as the role of trauma, prior sexual victimisation, instability in personal relationships, and parental stress. Dr Hamilton notes that it remains an open question, legally and ethically, whether gender, race or ethnicity can be included explicitly in risk tools. What is clear is that by not using gender-sensitive algorithms, the criminal justice system does a disservice to women and to the algorithm itself, whose predictive accuracy suffers as a result.


Algorithmic assessment scales have earned praise as progressive, scientifically informed and sophisticated tools. Yet they can clearly have a disparate impact on low-risk offenders in general, as well as on minorities and women, subjecting them to overly harsh penalties.

Unfair scoring

Other flaws Dr Hamilton highlights in related criminal justice studies include the tendency of risk tools to count the same crime multiple times, to wrongly include non-adjudicated and acquitted conduct in the score, and to make inadequate allowance for the age-crime curve (the tendency for offending to decline with age). Again, these flaws can lead to overprediction of criminal behaviour, with dire consequences for the offender.
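To see how double counting can inflate a criminal-history input, consider a hypothetical record in which one incident produced several charges. The minimal sketch below contrasts a naive count of charges with a count of distinct incidents. It is our own illustration of one way the problem can arise, not how COMPAS or any specific tool actually computes its inputs, and the function names and record layout are hypothetical.

```python
def naive_prior_count(charges):
    """Counts every charge as a separate prior offence."""
    return len(charges)


def distinct_incident_count(charges):
    """Counts each underlying incident once, however many charges it produced.

    `charges` is a list of (incident_id, charge_description) tuples.
    """
    return len({incident_id for incident_id, _ in charges})


# Hypothetical history: one incident (id 1) produced three separate charges.
charges = [
    (1, "burglary"),
    (1, "theft"),
    (1, "criminal mischief"),
    (2, "trespass"),
]

print(naive_prior_count(charges))        # 4
print(distinct_incident_count(charges))  # 2
```

Here the naive count doubles the apparent criminal history, and any score that rises with the number of priors would rise with it.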

While these flaws are alarming, Dr Hamilton’s purpose is not to reject empirically informed methods in their entirety: “Instead my hope is to reveal, highlight and question the multiple consequences that the past-future orientation has created. The potential reconstruction of an individual’s prior record often may have the unfortunate effect of altering an individual’s future.”

She concludes that, while algorithmic risk assessment has a role to play in managing offenders, we must not ignore its capacity to discriminate.

Personal Response

What impact has your research made so far?

Big data and algorithms have a lot to offer society on many fronts. In criminal justice, algorithmic predictions hold the promise of helping countries reduce overincarceration while maintaining public safety. This research has alerted policymakers and the public to the potential for bias when these algorithms are trained mostly on white male samples. I am now working with stakeholders to find the right balance between scientific advances and algorithmic fairness for women and minorities.