xAI-EWS — an explainable AI model predicting acute critical illness

The time it takes medical staff to diagnose a patient’s acute critical illness has a pivotal influence on the patient’s outcome. Acute critical illness usually follows a deterioration of the patient’s vital signs – the routinely measured heart rate, body temperature, respiration rate and blood pressure. Early clinical predictions tend to rely on manual calculations that turn these clinical parameters into screening metrics, including early warning score (EWS) systems and scores such as the Sequential Organ Failure Assessment (SOFA) score.
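To make the idea of a manually calculated screening score concrete, here is a minimal Python sketch of a threshold-based early warning score. The scoring bands, the `BANDS` table and the function names are hypothetical and chosen purely for illustration; they are not the thresholds of MEWS, SOFA or any validated clinical score.

```python
# Hypothetical scoring bands for illustration only; these are NOT the
# thresholds of MEWS, SOFA, or any validated clinical score.
# Each entry is (lower bound inclusive, upper bound exclusive, points).
BANDS = {
    "heart_rate": [(0, 40, 2), (40, 50, 1), (50, 100, 0), (100, 130, 1), (130, 1000, 2)],
    "respiration_rate": [(0, 9, 2), (9, 21, 0), (21, 30, 1), (30, 1000, 2)],
    "temperature_c": [(0, 35.0, 2), (35.0, 38.5, 0), (38.5, 100, 2)],
    "systolic_bp": [(0, 80, 2), (80, 100, 1), (100, 200, 0), (200, 1000, 2)],
}


def band_points(name, value):
    """Return the points for one vital sign by finding the band it falls in."""
    for low, high, points in BANDS[name]:
        if low <= value < high:
            return points
    raise ValueError(f"{name}={value} is outside every defined band")


def early_warning_score(vitals):
    """Sum the points of all vital signs into a single aggregate score."""
    return sum(band_points(name, value) for name, value in vitals.items())


stable = {"heart_rate": 70, "respiration_rate": 14, "temperature_c": 37.0, "systolic_bp": 120}
deteriorating = {"heart_rate": 135, "respiration_rate": 32, "temperature_c": 39.0, "systolic_bp": 85}

stable_score = early_warning_score(stable)
deteriorating_score = early_warning_score(deteriorating)
```

A score of this kind is easy to trace back to its inputs, which is exactly the transparency that clinicians value, but it only looks at a handful of values at a single point in time.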

While Artificial Intelligence (AI) lends itself to producing earlier and more accurate predictions than the traditional EWS systems, these black-box predictions are not easily explained to clinicians. The result is a trade-off between transparency and the potential power of predictive medicine. Healthcare transparency involves making information on quality, efficiency and patient experience available to the public. This information must be reliable and comprehensive in order to inform the choices of patients, providers, payers and others, and so achieve better outcomes in terms of quality of care and its cost. In such high-stakes applications, the simpler, more transparent systems are often chosen so that clinicians can easily back-trace a prediction. This strategy, however, can lead to negative outcomes for patients.

Simon Meyer Lauritsen, a Biomedical Engineer and Industrial PhD researcher at Enversion A/S and Aarhus University, together with his co-workers, has developed a robust and accurate AI model capable of predicting if a patient will develop an acute critical illness. Moreover, they have designed this algorithm in such a way that it supports the clinician by providing an explanation of its prediction with reference to the electronic health record data supporting it.

Deep Learning algorithms

Dr Lauritsen and the team explain that in order for clinical medicine to benefit from the higher predictive power of AI, explainable and transparent Deep Learning algorithms are crucial. Deep Learning is a machine learning method that is particularly suitable for big data sets. Deep Learning algorithms deploy artificial neural networks based on the structure and function of the brain and take advantage of copious cheap computation. These algorithms involve large complex neural networks, and their performance will continue to increase as they are trained with more and more data.

Previous research into AI systems trained on electronic health records has demonstrated high levels of predictive performance, providing early, real-time prediction of acute critical illness. Unfortunately, the lack of information regarding the complex decisions made by such systems has hindered their clinical translation.

Explainable AI early warning score system: xAI-EWS

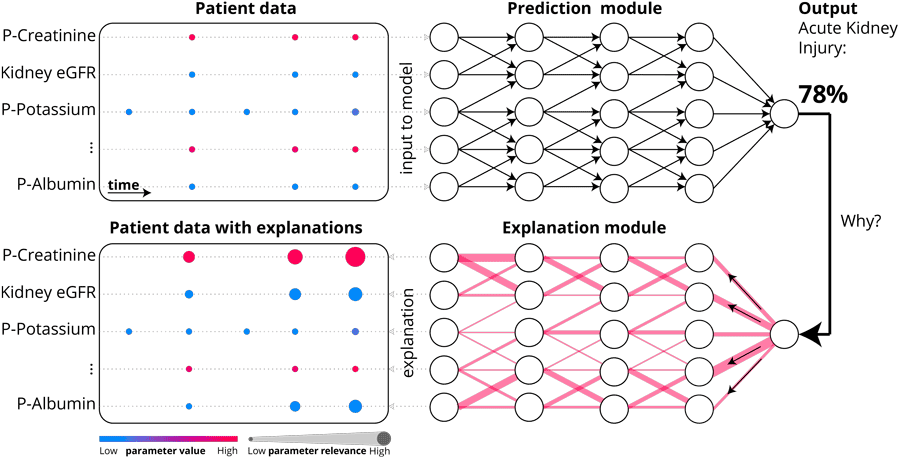

Using historical health data in the form of electronic health records, the research team’s innovative AI algorithm can predict whether a patient will develop an acute critical illness. This algorithm has been trained to recognise cases from the historical data that are similar to the current case. Given the patient’s vital parameters and blood tests, it can identify the early signs of critical illness. It then presents the user with an assessment of when and how to proceed with the patient’s care.

The researchers demonstrate how the explainable AI early warning score system, xAI-EWS in short, overcomes the shortfalls observed in other computational models by taking a Deep Learning approach to analysing a diverse multicentre data set. They used the secondary healthcare data of all residents aged 18 years and over in four Danish municipalities collected over the five-year period from 2012 to 2017. This data was made up of information from electronic health records and included biochemistry, medicine, microbiology, and procedure codes. It was extracted from the CROSS-TRACKS cohort, a population-based Danish cross-sectorial cohort comprising a mixed rural and urban multicentre population served by four regional hospitals as well as one larger university hospital. Emergency medicine, intensive care, and thoracic surgery were among the various departments in each of the hospitals.

Photo Credit: https://www.nature.com/articles/s41467-020-17431-x

Dr Lauritsen extracted data on all 163,050 available inpatient admissions from this period for inclusion in his analysis. A total of 66,288 individual patients were involved in these admissions. The patients had an average age of 55 years and 45.9% of them were male. The study focused on three acute critical illnesses frequently observed in emergency medicine cases: sepsis, acute kidney injury, and acute lung injury. The prevalence of these emergency medicine cases among the admissions was 2.44%, 0.75%, and 1.68%, respectively. The model parameters comprised 27 laboratory parameters and six vital signs. These features were selected by clinicians specialising in emergency medicine.

Predictive performance

The predictive performance of this digital medicine model was compared with three baseline prediction models for acute critical illness: two traditional models, MEWS (modified early warning score) and SOFA, and another AI model, a machine-learning algorithm that achieved strong results in a previous randomised study.

The clinical usefulness of the xAI-EWS system was evaluated by calculating the net benefit at varying decision thresholds for the model, based on the number of true positives and false positives generated from a sample of cases. This novel approach for early detection had more true positives and fewer false negatives than any of the existing alarm systems. Throughout this comparative study the xAI-EWS system outperformed all three baseline models.
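The article does not give the formula behind the net benefit calculation. As a hedged illustration, the sketch below uses the standard decision-curve definition, in which false positives are weighted by the odds of the decision threshold: net benefit = TP/N − (FP/N) × p_t/(1 − p_t). The function name `net_benefit` and the toy labels and probabilities are assumptions made for this example, not data from the study.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of alerting on every case whose predicted risk >= threshold.

    Uses the standard decision-curve formula:
        TP/N - (FP/N) * threshold / (1 - threshold)
    """
    if not 0 < threshold < 1:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    # Count alerts that were correct (true positives) and incorrect (false positives).
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1 - threshold)


# Toy example: four hypothetical admissions, two of which became critically ill.
labels = [1, 0, 1, 0]
risks = [0.9, 0.6, 0.4, 0.1]

# Evaluating the same predictions at several thresholds traces out a decision curve.
curve = {t: net_benefit(labels, risks, t) for t in (0.2, 0.5)}
```

A low threshold tolerates more false alarms in exchange for catching more cases, so comparing models across a range of thresholds shows where each one is clinically useful.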

Quality of the explanations

The quality of the explanations accompanying the xAI-EWS predictions was also assessed through manual inspection by clinicians specialising in emergency medicine. Their appraisal of the predictions and explanations generated by the xAI-EWS system demonstrates its general clinical relevance. In addition to its high predictive performance, xAI-EWS explains its predictions by pinpointing the decisive input data, which enables clinicians to understand the reasoning behind them.
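The article does not describe how xAI-EWS computes its explanations, so the following Python sketch is not the authors' method. It swaps in a much simpler technique, additive attribution for a toy logistic model, only to show what "pinpointing the decisive input data" can look like. The weights, reference values, bias and feature names are all hypothetical.

```python
import math

# Hypothetical model parameters for illustration; not taken from xAI-EWS.
WEIGHTS = {"heart_rate": 0.03, "respiration_rate": 0.10, "p_creatinine": 0.01}
BASELINE = {"heart_rate": 70.0, "respiration_rate": 14.0, "p_creatinine": 80.0}
BIAS = -3.0


def contributions(features):
    """Contribution of each input: weight times deviation from its reference value."""
    return {name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS}


def predict_risk(features):
    """Logistic risk computed from the bias plus the summed contributions."""
    z = BIAS + sum(contributions(features).values())
    return 1.0 / (1.0 + math.exp(-z))


def explain(features):
    """Rank the inputs by how strongly they moved the prediction."""
    return sorted(contributions(features).items(), key=lambda kv: abs(kv[1]), reverse=True)


patient = {"heart_rate": 110.0, "respiration_rate": 28.0, "p_creatinine": 100.0}
risk = predict_risk(patient)
ranking = explain(patient)  # most decisive input first
```

In this toy case the ranking puts respiration rate first, which is the kind of pointer back to the record that lets a clinician check whether the prediction makes clinical sense.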

The researchers emphasise another significant benefit of the xAI-EWS system: the daily workflow of healthcare professionals at hospitals does not have to be altered to take advantage of the system’s predictive power, as the model is based on data that is routinely captured in the electronic health records. Furthermore, while clinicians are entering the raw patient data into the electronic health records, xAI-EWS makes real-time predictions available, improving the timeliness of detection of acute critical illnesses.

Broader impact

The researchers explain that “the greatest value of detecting critical illness is that potential interventions become timelier and more focused”. This means that resources for both treatment and care can be better utilised. Moreover, the affected patients benefit from an improved chance of survival, fewer side effects and increased quality of life.

In relation to the current Covid-19 pandemic, xAI-EWS can help doctors assess whether or when a patient will need external ventilation. While Covid-19 is a new disease, impaired lung function is well known, so predictions based on previous data on impaired lung function can contribute to fast and effective treatment of the patient.

The concept of AI can attract scepticism. How can we ensure that an algorithm is actually doing what we want it to? In this case, the critical illness prediction algorithm ensures that the physician is involved throughout the process. A crucial part of the research team’s work on the development of the xAI-EWS system is xAI – “Explainable artificial intelligence”. From a clinical implementation perspective, it is essential that the algorithm can accurately explain its recommendations and so support the clinical staff in understanding each prediction. The research team concludes, “in this way, doctors can act on the detection and ensure that patients receive the right treatment. But at the same time [they] also fully understand why the algorithm has calculated a risk. This fundamental, and unique, feature of the algorithm is considered crucial to ensure an optimal clinical translation.”

Personal Response

What inspired you to develop the xAI-EWS system?

I have a background in prehospital emergency care, and I’m married to a medical doctor who has worked in the emergency room. We have both experienced how critical conditions can develop quickly and surprise even experienced clinicians. Sepsis alone is the third most common cause of death globally and contributes to 15% of deaths in Denmark. When I was introduced to the possibilities of applying machine learning to health data, it was clear to me that these techniques could make a difference for sick people in need.